One of the most difficult and time-consuming parts of maintaining a perimeter is reviewing firewall logs. It’s not uncommon for an organization to generate 50, 100, 500 MB or more of firewall log entries every day. The task is so daunting, in fact, that many administrators choose to ignore their logs. In this series I’ll show you how to expedite the firewall log review process so that you can finish it in less time than it takes to drink your morning cup of coffee.

Why firewall log review is important

I once took part in a panel discussion where one of my fellow SANS instructors announced to the crowd “the perimeter is dead and just short of useless”. I remember thinking I was glad I was not one of his students. I occasionally take on new clients, and roughly seven times out of ten I can identify at least one compromised system they did not know about. In every case it has been the client’s own firewall logs that pointed me to the infected system.

In the old days firewall log review was all about checking your inbound drop entries to look for port scans. Today the focus is on outbound traffic. Specifically, you should be checking permitted patterns. With the plethora of non-signature malware in circulation, it has become far too easy for an attacker to get malicious code onto a system. A properly configured perimeter will show you when a compromised system tries to call home. This is typically your best chance to identify a compromised system.

What needs to be logged?

Dropped traffic does not have to be logged, provided you are not blind to DoS flood attacks. For example, if you are running a tool such as NTOP on your perimeter, or collecting RMON or NetFlow data, then it is OK not to log dropped packets, as you can collect this information through other means.

When traffic is permitted across the perimeter, however, you need to log it. This includes all permitted traffic, regardless of direction (egress as well as ingress). At a minimum we want to see header information for the first packet in a session. Anything beyond that can be considered a bonus.

Some kernel-level rootkits do an excellent job of hiding themselves within the infected system. In fact, many are so stealthy they cannot be detected by checking the system directly. One possible option is to pull the hard drive and check it from a known-clean system. Obviously this is highly impractical when you have more than a couple of systems.

A better option is to check the network for telltale signs of the malware calling home. Malware typically creates outbound sessions either to transfer a toolkit or to check in for marching orders. The firewall is in an optimal position to block, or at the very least log, both of these activity patterns. So by reviewing our firewall logs, we can quickly check every system on our network for indications of a compromise.

Malware can leverage any socket to call home, but most use TCP/80 (HTTP) or TCP/443 (HTTPS). This is because malware authors know that most firewall administrators do not log these outbound sessions, since they are responsible for the greatest portion of perimeter traffic. So again, if we are going to permit the traffic to pass our perimeter, we must ensure we are logging it.

Log review as a process

The mistake I see most administrators make is that they perform a time-linear analysis of their log entries, reading them in chronological order while looking for “the interesting stuff”. The problem is that suspect traffic can be extremely difficult to detect this way, as it will be mixed in with the normal traffic flow. So the first thing we need to do is get the normal traffic out of the way.

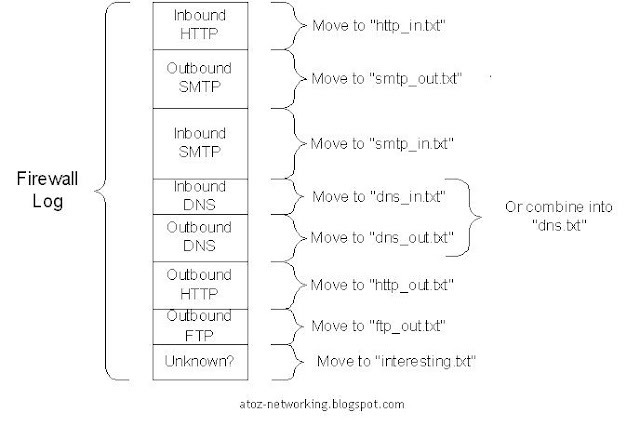

Think of the rectangle in Figure #1 as representing your firewall log. Assume it contains a mixture of normal as well as suspect traffic patterns. Rather than immediately looking for the suspect patterns, let’s first get the normal patterns out of the way. For example HTTP headed to our Web server from the Internet is an expected pattern. If we pull all of these entries out of the log file, the log file becomes a little bit smaller. Inbound and outbound SMTP to our mail server is another expected pattern. Again, if we can remove these entries as well the firewall log file becomes even smaller.

Now we simply continue this process for every traffic pattern we expect to see crossing our perimeter. The more traffic patterns we recognize and move out of the way, the smaller the final log file becomes. What’s left is just the unexpected traffic patterns that require review time from a firewall administrator. I’ve seen sites that typically generate 250-300 MB worth of logs daily end up with a final file less than 100 KB in size. Needless to say, 100 KB takes far less time to review than 300 MB.
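To make the idea concrete, here is a minimal Python sketch of the "remove what you expect, review what's left" approach. Everything specific in it is an assumption for illustration: the one-line log format, the `WEB_SERVER` and `MAIL_SERVER` addresses, and the function names are all hypothetical. Real firewall products use their own log formats, so the parsing would need to be adapted.

```python
import re

# Hypothetical log format (an assumption for this sketch), one entry per line:
#   <timestamp> <action> <proto> <src_ip>:<src_port> -> <dst_ip>:<dst_port>
ENTRY = re.compile(
    r"^(?P<ts>\S+) (?P<action>\S+) (?P<proto>\S+) "
    r"(?P<src>[\d.]+):(?P<sport>\d+) -> (?P<dst>[\d.]+):(?P<dport>\d+)$"
)

# Assumed server addresses; substitute your own.
WEB_SERVER = "192.0.2.10"
MAIL_SERVER = "192.0.2.25"


def is_web_to_our_server(e):
    """HTTP headed to our web server is an expected pattern."""
    return e["proto"] == "TCP" and e["dst"] == WEB_SERVER and e["dport"] == "80"


def is_smtp_via_our_server(e):
    """Inbound or outbound SMTP involving our mail server is expected."""
    if e["proto"] != "TCP" or e["dport"] != "25":
        return False
    return MAIL_SERVER in (e["src"], e["dst"])


# Add one rule per traffic pattern you expect to cross the perimeter.
EXPECTED = [is_web_to_our_server, is_smtp_via_our_server]


def unexpected_entries(lines):
    """Return the lines that match no expected pattern.

    Lines that cannot be parsed are kept, so nothing is silently dropped.
    """
    leftover = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        match = ENTRY.match(line)
        if match is None or not any(rule(match.groupdict()) for rule in EXPECTED):
            leftover.append(line)
    return leftover
```

Each new rule added to `EXPECTED` shrinks the leftover list a little further, which mirrors the shrinking rectangle in the figure.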

Automate, automate, automate

If this seems like a lot of work, it is, but only initially. What I do is create a batch file, shell script, or set of database queries to automate the process of parsing the firewall log. We can then run this process as a cron job or scheduled task. This means that all of the hard work (breaking up the main log file into smaller files) can be done off hours. When you walk in the door in the morning, the log file will already be segregated. You can then immediately focus on the suspect patterns.
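As a rough illustration of what such a script might look like, the Python sketch below breaks a log into named sort files. It assumes the same hypothetical one-line format as before (`<timestamp> <action> <proto> <src>:<port> -> <dst>:<port>`), an internal network of 10.0.0.0/8, and made-up rule and file names, so treat it as a starting point rather than a finished tool.

```python
from pathlib import Path


def parse(line):
    """Parse one entry in the assumed format; return None if it does not fit."""
    parts = line.split()
    if len(parts) != 6 or parts[4] != "->":
        return None
    ts, action, proto, src, _, dst = parts
    src_ip, _, sport = src.rpartition(":")
    dst_ip, _, dport = dst.rpartition(":")
    return {"ts": ts, "action": action, "proto": proto,
            "src": src_ip, "sport": sport, "dst": dst_ip, "dport": dport}


# Hypothetical sort rules: file name -> predicate on a parsed entry.
RULES = {
    "outbound_http": lambda e: (e["proto"] == "TCP"
                                and e["src"].startswith("10.")
                                and e["dport"] in ("80", "443")),
    "ssh": lambda e: e["proto"] == "TCP" and e["dport"] == "22",
}


def sort_entries(lines, rules=RULES):
    """Group lines by every rule they match; the rest go to 'unmatched'."""
    buckets = {name: [] for name in rules}
    buckets["unmatched"] = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        entry = parse(line)
        names = [n for n, rule in rules.items() if entry and rule(entry)]
        # An entry matching several rules is copied into each sort file.
        for name in names or ["unmatched"]:
            buckets[name].append(line)
    return buckets


def write_sort_files(buckets, out_dir):
    """Write one '<name>.log' file per bucket into out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for name, entries in buckets.items():
        (out / f"{name}.log").write_text("".join(e + "\n" for e in entries))
```

A small wrapper that reads the day's log, calls `sort_entries`, and then `write_sort_files` could be scheduled to run overnight, leaving the `unmatched.log` file as the first thing to review in the morning.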

Helpful tips

Here are some tips I’ve developed over the years:

- There is no “single right way” to segregate log entries. It is all about how you personally spot suspect patterns. You can sort by IP address, port number, or whatever info you have to work with in your logs.

- This is not about obsessively putting each log entry into exactly one sort file. This process is about creating easier to spot patterns. For example, a TCP reset in an HTTP stream could go in both an “error” file and an “HTTP” file. Each would make it easier to spot different types of patterns.

- Start by pulling out error packets (TCP resets, ICMP type 3’s & 11’s). They always indicate something is broken or someone did something unexpected.

- A smart attacker will never make your “top 5 communicators” list. I’ve seen infected systems make as few as four outbound connections in a day.

- Make a note of the average size of each of your sort files. A sharp spike in traffic may warrant further investigation.

- Sometimes it is helpful to parse the same pattern into two different files. For example, I create an “outbound HTTP” file, and then parse out all of the traffic generated during non-business hours. This makes it much easier to find infected systems calling home.

- Whitelist known patch sites. For example, systems may call home all night long to Microsoft and Adobe to check for updated patches. If you can parse out these entries, you’ll end up with far less noise in your final file.

- Some sites find it helpful to parse out users checking their personal email. This can be helpful information if data leakage occurs.

- I like to segregate traffic based on security zone. For example I would be far less concerned about SSH from the internal network to the DMZ, than I would about SSH headed to the Internet.

- In an ideal world, every traffic pattern you find will be described in your organization’s network usage policy. If it’s not, then further investigation may be required.

- Expect to tweak your script over time, as networks are an evolving entity.
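Two of the tips above lend themselves to a short sketch: pulling out entries generated during non-business hours, and flagging a sort file whose size spikes compared to its recent average. The Python below is one possible way to do both. The business hours (08:00 to 18:00, Monday to Friday), the ISO 8601 timestamp at the start of each line, and the spike factor of 3 are all assumptions chosen for illustration.

```python
from datetime import datetime, time

# Assumed business hours; adjust to match your organization.
BUSINESS_START = time(8, 0)
BUSINESS_END = time(18, 0)


def is_off_hours(timestamp):
    """True if an ISO 8601 timestamp falls outside assumed business hours."""
    ts = datetime.fromisoformat(timestamp)
    if ts.weekday() >= 5:  # Saturday or Sunday
        return True
    return not (BUSINESS_START <= ts.time() < BUSINESS_END)


def off_hours_entries(lines):
    """Keep only entries whose leading timestamp is outside business hours."""
    return [ln for ln in lines if ln.strip() and is_off_hours(ln.split()[0])]


def is_spike(todays_size, previous_sizes, factor=3.0):
    """True if today's sort file is more than `factor` times the average size."""
    if not previous_sizes:
        return False  # no history yet, nothing to compare against
    average = sum(previous_sizes) / len(previous_sizes)
    return todays_size > factor * average
```

Running `off_hours_entries` over an “outbound HTTP” sort file gives the second, smaller file described above, while `is_spike` could be fed the byte size of each sort file along with the sizes recorded on previous days.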

Exec Summary

Whitelisting expected traffic patterns in your firewall log can help to expedite the log review process. Similar traffic becomes grouped together, and can be more easily checked for suspect patterns. In part 2 of this series I’ll walk you through the process of creating your own script using a number of different firewall products.

Continue to Review A Firewall Log In 15 Min Or Less – Part 2
